Learning to Dexterously Pick or Separate Tangled-Prone Objects for Industrial Bin Picking

Xinyi Zhang1, Yukiyasu Domae2, Weiwei Wan1, Kensuke Harada1,2

1Osaka University, 2National Institute of Advanced Industrial Science and Technology (AIST)

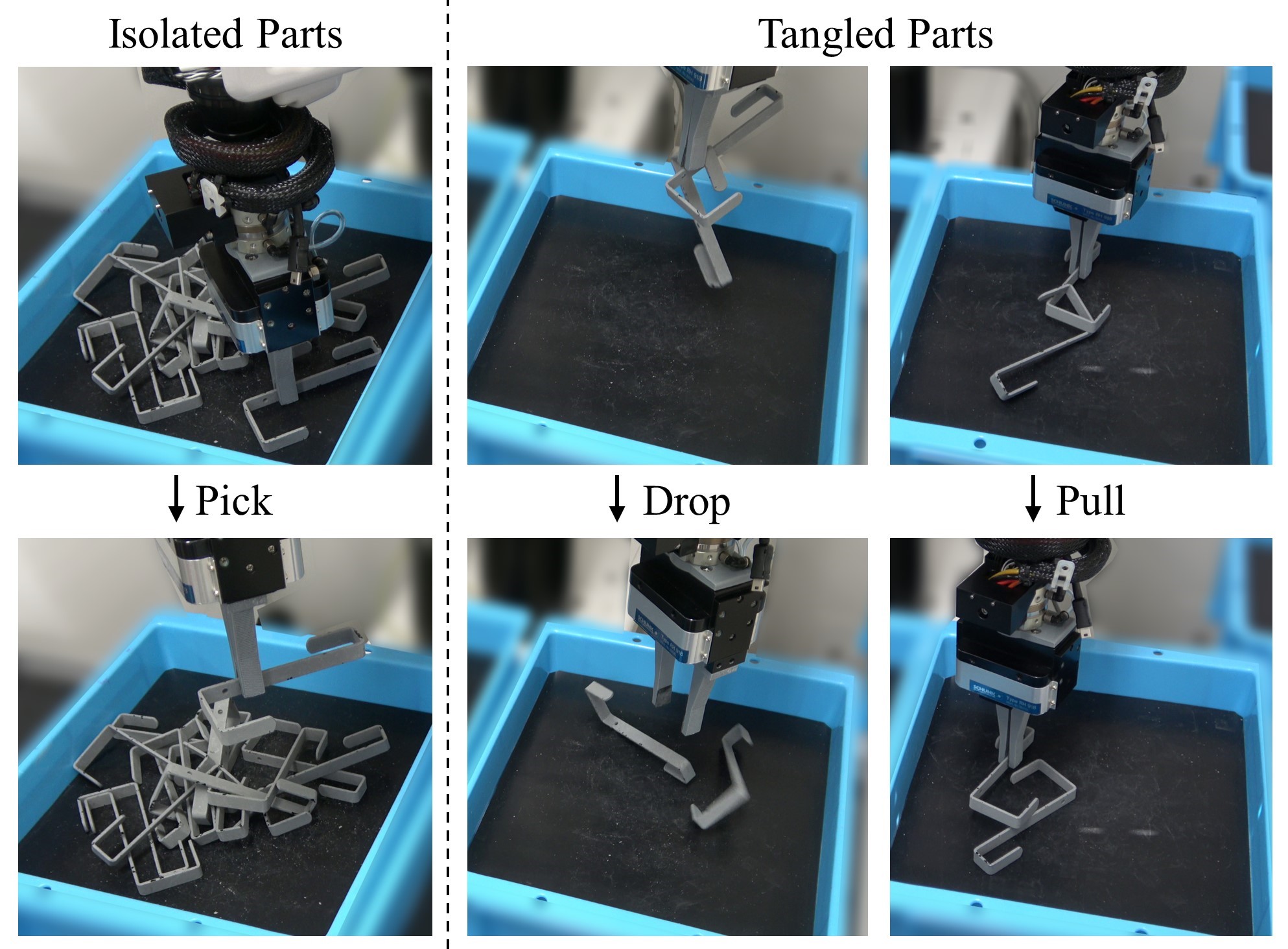

Industrial bin picking for tangled-prone objects requires the robot to either pick up untangled objects or perform separation manipulation when the bin contains no isolated objects. The robot must be able to flexibly perform appropriate actions based on the current observation. This is challenging due to high occlusion in the clutter, elusive entanglement phenomena, and the need for skilled manipulation planning. In this paper, we propose an autonomous, effective, and general approach for picking up tangled-prone objects in industrial bin picking. First, we learn PickNet, a network that maps the visual observation to pixel-wise possibilities of picking isolated objects or separating tangled objects and infers the corresponding grasp. Then, we propose two effective separation strategies: (1) dropping the entangled objects into a buffer bin to reduce the degree of entanglement, and (2) pulling to separate the entangled objects in the buffer bin, planned by PullNet, a network that predicts the position and direction for pulling from visual input. To efficiently collect data for training PickNet and PullNet, we embrace the self-supervised learning paradigm using an algorithmic supervisor in a physics simulator. Real-world experiments show that our policy can dexterously pick up tangled-prone objects with a success rate of 90%. We further demonstrate the generalization of our policy by picking a set of unseen objects.

Paper

Latest version: here (with supplementary material)

IEEE Robotics and Automation Letters (RA-L), 2023.

Code is available on GitHub.

Supplementary Video

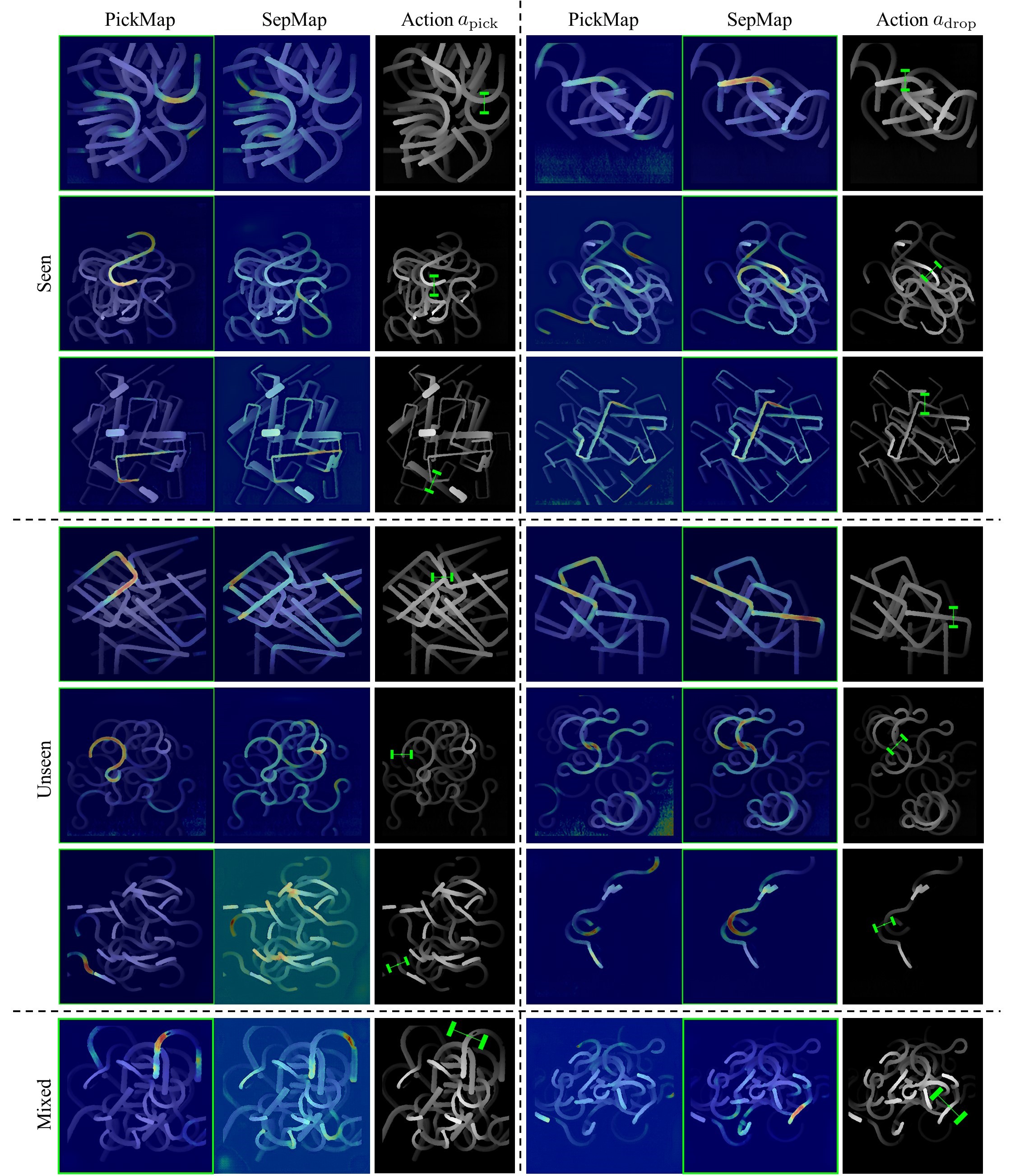

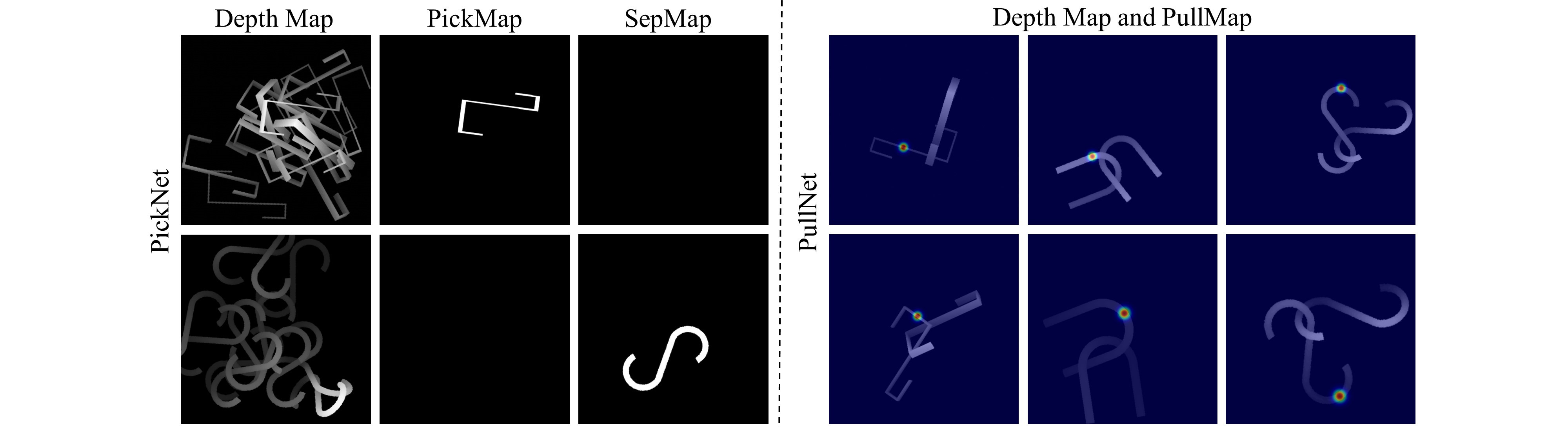

Results of predicting isolated/tangled objects using PickNet

PickNet outputs two heatmaps, PickMap and SepMap, which indicate the grasp affordance of isolated and tangled objects in the clutter, respectively.
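As a rough illustration of how two such heatmaps could drive the choice between picking and separating, the sketch below compares their peak values and returns the grasp pixel of the stronger one. The decision rule (pick when the PickMap peak is at least as high as the SepMap peak) and the names `argmax2d` and `choose_action` are assumptions made for this example, not the released implementation.

```python
def argmax2d(heatmap):
    """Return (value, row, col) of the largest entry in a 2D nested list."""
    return max(
        ((value, row, col)
         for row, line in enumerate(heatmap)
         for col, value in enumerate(line)),
        key=lambda item: item[0],
    )


def choose_action(pick_map, sep_map):
    """Pick an action from two heatmaps of equal size (hypothetical rule).

    Assumption: the action whose heatmap has the higher peak wins, and the
    grasp is placed at that peak pixel. Ties go to picking.
    """
    pick_value, pick_row, pick_col = argmax2d(pick_map)
    sep_value, sep_row, sep_col = argmax2d(sep_map)
    if pick_value >= sep_value:
        return ("pick", (pick_row, pick_col), pick_value)
    return ("separate", (sep_row, sep_col), sep_value)


# Toy 2x2 heatmaps standing in for network outputs.
pick_map = [[0.1, 0.2], [0.3, 0.8]]
sep_map = [[0.5, 0.1], [0.0, 0.2]]
action, pixel, score = choose_action(pick_map, sep_map)
```

With the toy maps above, the PickMap peak (0.8) exceeds the SepMap peak (0.5), so the sketch selects a pick at pixel (1, 1).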

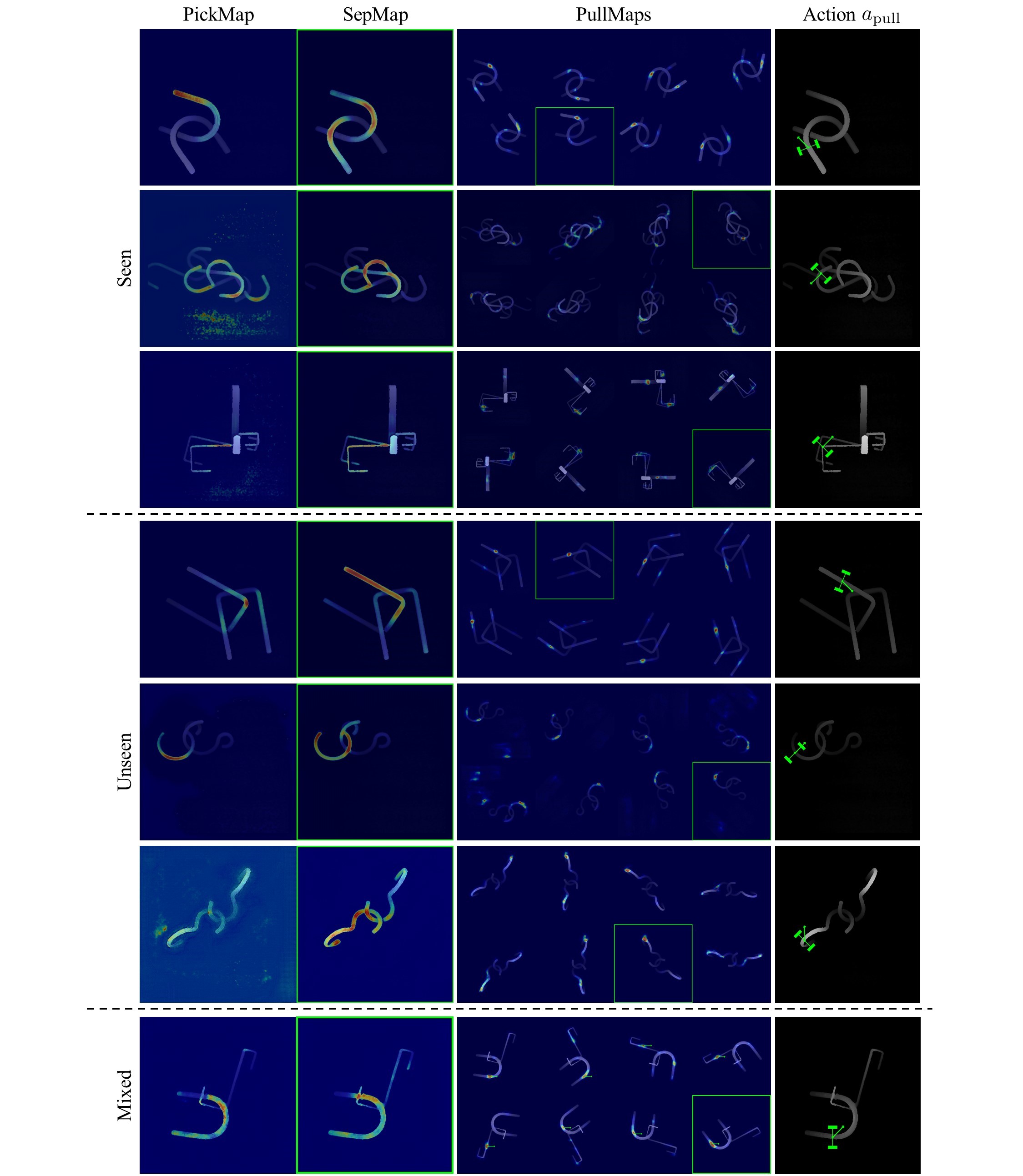

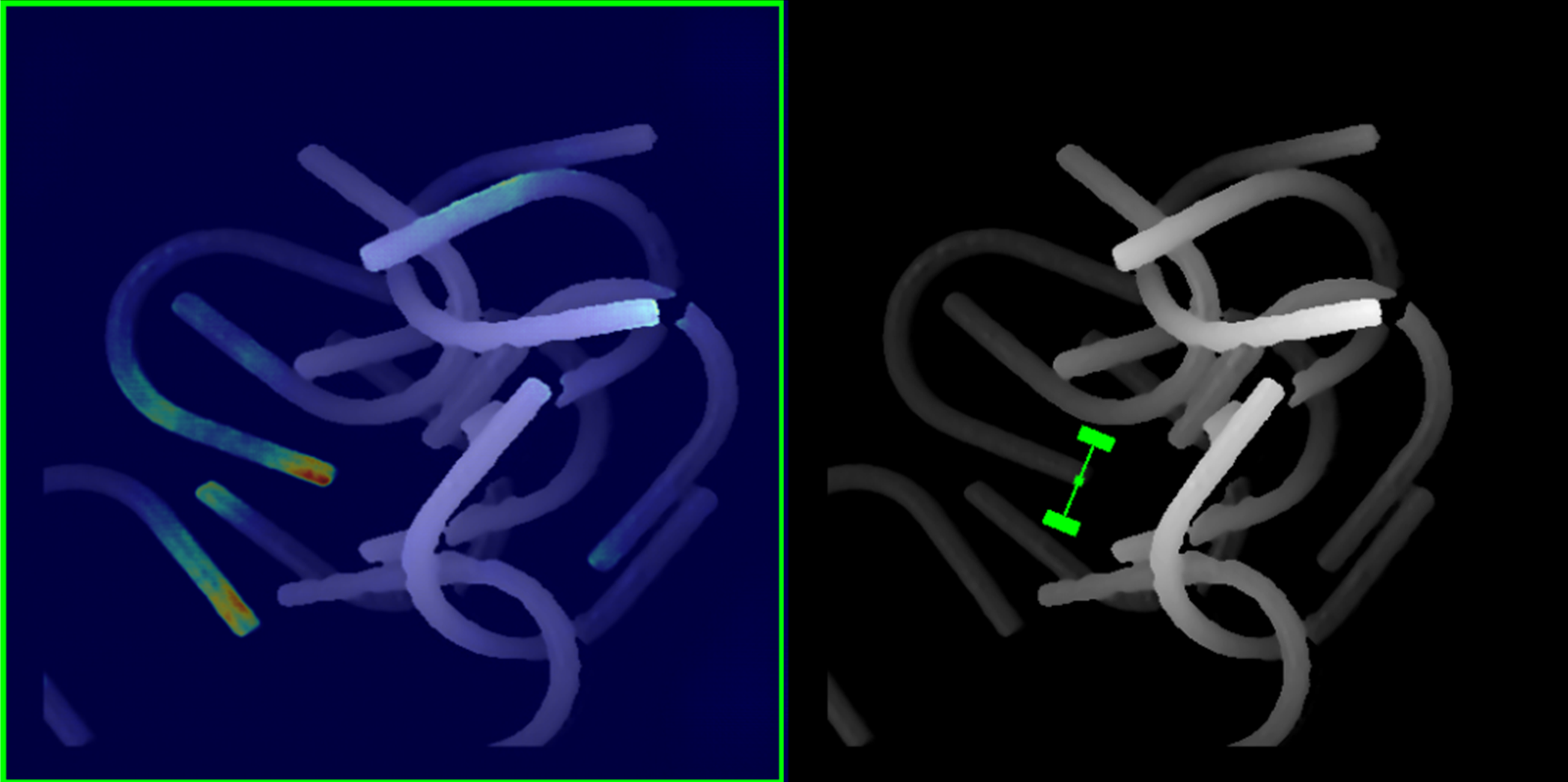

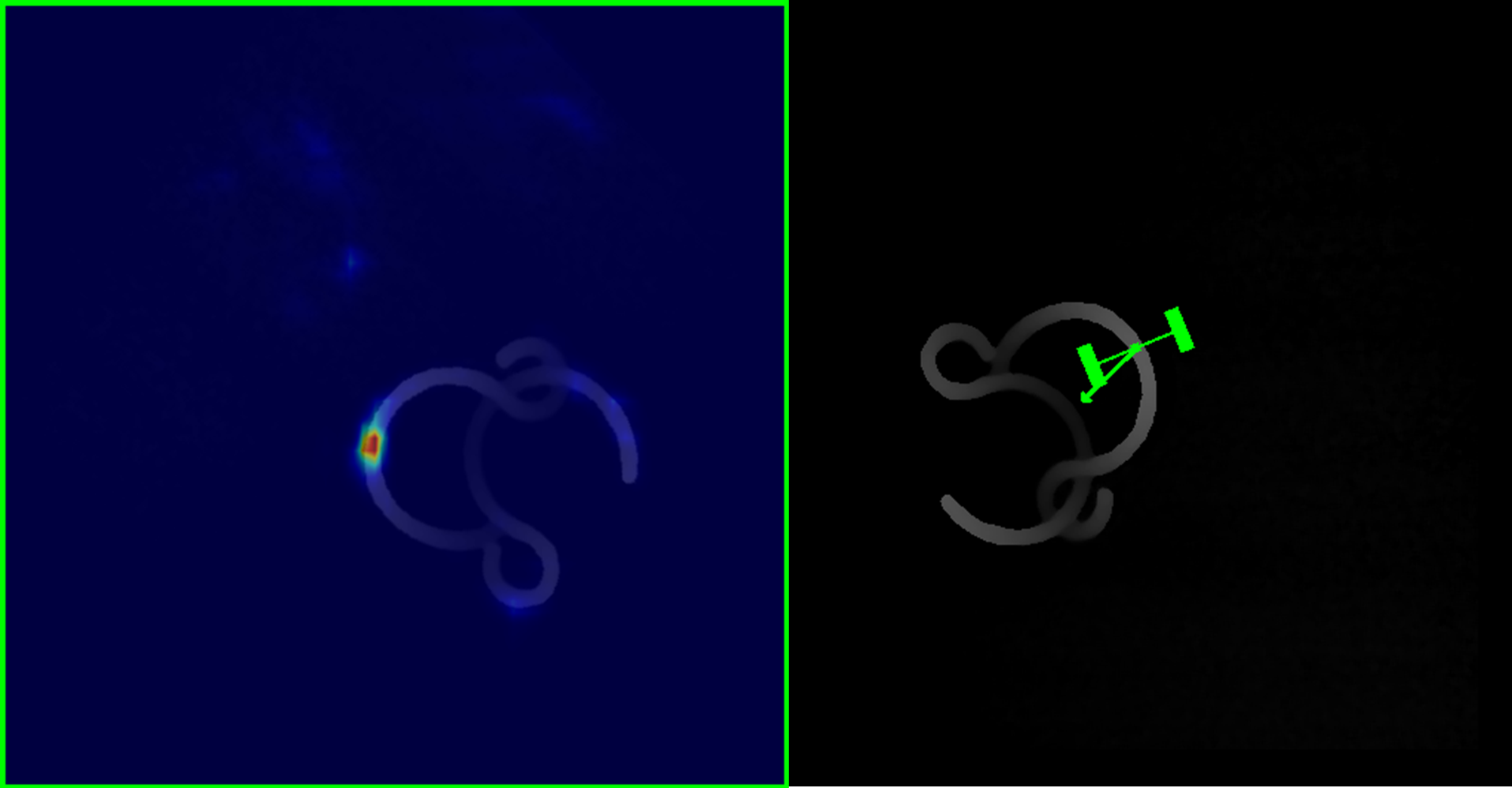

Results of planning pulling actions using PullNet

We rotate the input depth image by 8 angles, each representing one of 8 pulling directions; in every rotated image, the pulling direction points to the right. Among the resulting PullNet outputs (PullMaps), we select the one with the highest value.
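The selection step described above can be sketched as follows: given one PullMap per rotated input, take the global maximum, then map the winning pixel and the rightward direction back into the unrotated image. The rotation convention (rotation k turns pixel coordinates about the image center by k * 360/8 degrees using the standard 2D rotation matrix on (x, y)), the function name `select_pull`, and the returned dictionary layout are assumptions for this example only.

```python
import math


def select_pull(pull_maps):
    """Choose a pull position and direction from per-rotation heatmaps.

    pull_maps[k][row][col] is the score predicted on the input rotated by
    theta_k = 2*pi*k / len(pull_maps). Assumed convention: a point p in the
    original image appears at c + R(theta_k)(p - c) in the rotated image,
    where c is the image center and R is the standard rotation matrix.
    """
    n = len(pull_maps)
    score, k, row, col = max(
        ((value, k, row, col)
         for k, heatmap in enumerate(pull_maps)
         for row, line in enumerate(heatmap)
         for col, value in enumerate(line)),
        key=lambda item: item[0],
    )
    theta = 2.0 * math.pi * k / n
    height, width = len(pull_maps[k]), len(pull_maps[k][0])
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    dx, dy = col - cx, row - cy
    # Undo the rotation: apply R(-theta) to the offset from the center.
    x = cx + math.cos(theta) * dx + math.sin(theta) * dy
    y = cy - math.sin(theta) * dx + math.cos(theta) * dy
    # "Right" in the rotated image, (1, 0), expressed in the original frame.
    direction = (math.cos(theta), -math.sin(theta))
    return {"score": score, "angle_index": k,
            "position": (x, y), "direction": direction}


# Toy input: eight 5x5 maps with a single peak in the third rotation.
maps = [[[0.0] * 5 for _ in range(5)] for _ in range(8)]
maps[2][1][2] = 0.9
result = select_pull(maps)
```

In this toy case the peak sits in the map for the 90 degree rotation, so `result["angle_index"]` is 2 and the position and direction are expressed in the coordinates of the original, unrotated image. A real pipeline would also need the same rotation convention as the image-rotation routine used to build the network inputs.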

Data collection in simulation

Simulated picking demonstration using various objects

The following videos show the demonstrations for data collection in a physics simulator.

The following figures show the ground truth for the PickNet/PullNet data.

Separation strategy 1: Dropping entangled objects into a buffer bin to separate them.

The following videos show the robot dropping the entangled objects into the buffer bin.

Seen objects

Unseen objects

Separation strategy 2: Pulling to disentangle various objects.

The following videos show the robot untangling the objects by pulling combined with wiggling.

Seen objects

Unseen objects

Video: PullNet can plan pulling actions for various patterns of entanglement.

We test PullNet on different entanglement patterns in the buffer bin.

Seen object

Unseen object

Failure modes and limitations

Grasp failure

Challenging entanglement patterns

Unsuitable object shapes

Acknowledgements

This work is supported by Toyota Motor Corporation.